Words formed with 窟 (100 words with 窟)

alicucu 2025-12-21 21:48

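石窟 (grotto), 赌窟 (gambling den), 狡兔三窟 (a wily hare has three burrows), 龙潭虎窟 (dragon's pool and tiger's den)

窟 pinyin: kū. Radical: 穴. Stroke count: 13. Structure: top-bottom. Formation: phono-semantic compound, with 穴 as the semantic component and 屈 as the phonetic component. Stroke order: 捺捺折撇捺折横撇折竖竖折竖. Definitions: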

① (noun) A cave or hole: 石~ | 山~ | 狡兔三~.

② (noun) A place where bad people gather to do wrong; a den: 匪~ | 盗~ | 赌~.

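Word and example sentences: 窟窿 (hole)

5. The branches wanted to tear the sky apart but only poked a few tiny holes in it. Light from beyond the sky shone through them, and people called them the moon and the stars.

6. The apple is covered with small holes chewed by worms, like a face full of cute little eyes.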

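The word is 窟窿.

Example sentences

My trousers suddenly tore and left a hole, which embarrassed me to no end.

I had never seen this hole before; it looks like the mice were at work again yesterday.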

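Word and example sentences: 窟窿 (hole)

3. Wilson followed the sound of crying and found that it was coming from a hole in the snow.

4. Once again she wanted to laugh: a wizened old man in an undershirt full of holes, wrestling a fish in the living room. It seemed to be the first time she had seen such a scene.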

Words formed with 窟: 窟窿, 魔窟, 巢窟, 窟宅, 窟穴, 石窟, 龛窟, 地窟, 桂窟, 窟窡, 窟栊, 盗窟, 鱼窟, 艳窟, 赌窟, 窟臀, 鬼窟, 窟薮, 鼍窟, 玉窟.

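1. 窟 pronunciation: kū. Meaning: a cave or hole, as in 狡兔三窟; a place where a certain kind of people gather or live, as in 匪窟.

2. Word: 石窟: a cave in a rock face.

3. 窟宅: the dwelling of gods and spirits.

4. 巢窟: a cave used for dwelling or hiding.

5. 魔窟: the lair of demons and monsters; figuratively, a place where evil forces are entrenched.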

相关推荐

- 奥迪a5敞篷二手车价格(奥迪a5敞篷二手车价格及图片)

-

当然可以卖,奥迪A5敞篷车是一款高端豪华车,有着出色的品质和性能,造型时尚,驾乘感受非常舒适。对于喜欢敞篷车款的消费者来说,这是一款非常吸引人的选择。对于卖家来说,如果车辆的整体情况和里程数符合市场需...

- cia和fbi哪个权力大(cia和fbi谁更牛)

-

确切来说这两个机构其实没有什么可比性,因为CIA隶属于国家安全委员会,主要任务是公开和秘密地收集外国政治、文化、科技等情报,协调国内各情报机构的活动,向总统和国家安全委员会提供报告。FBI是隶属于美...

- 好看的武打片(好看的武打片老电影推荐)

-

那个年代,不只是邵氏,嘉禾等公司的电影也都不错,我一起都列出来吧:独臂刀、刺马、水浒传、十三太保、十八般武艺、少林搭棚大师、残缺、五毒、三少爷的剑、天涯明月刀、多情剑客无情剑、马永贞、刺马、上海滩十三...

- 中国历史朝代时间表多少年(中国历史朝代年历表)

-

(1)大宋帝国:960年至1279年,存在时间长达320年,期间官员廉洁奉公,百姓富裕安乐,社会路不拾遗夜不闭户,堪称中国统治最长盛世最长的黄金时代。(2)大清帝国:1616年至1911年,存在295...

- 苏泊尔电饭煲e0故障(苏泊尔电饭煲e0故障维修视频)

-

显示e0是传感器开路故障。先拆开顶部检查温度传感器连接线是否断路,通常这个部件由于经常使用,而且材质都不好,导致温感器的连接线断裂。如果是线路断裂,则找一根相仿的电线连接断裂部分,并用电工胶带做好绝缘...

- 最恐怖的台风第一名(世界最恐怖的台风)

-

是威马逊台风。威马逊台风于2014年7月登陆我国的海南省文昌市,这个超强台风自登陆上岸后,就打破了之前2006年的桑美台风的记录,成为了我国建国以来强的超强台风,直接导致南宁这个城市发生海变,城市内部...

- 干燥剂有哪几种(食品干燥剂有哪几种)

-

1、酸性干燥剂:浓硫酸、P2O5、硅胶浓硫酸(强氧化性酸)、P2O5(酸性白色粉末)、硅胶(它是半透明,内表面积很大的多孔性固体,有良好的吸附性,对水有强烈的吸附作用。含有钴盐的硅胶叫变色硅胶,没有吸...

- 家用直饮水机(家用直饮水机十大名牌)

-

1、看直饮机是否有卫生许可证批件上所写的产品名字、型号要一致,因为即使在有证企业中,也存在“一证多用”的情况,即出现多个产品型号用一个卫生许可批件,消费者一定要查看清楚。2、看直饮机滤芯是否合适第一代...

- 滚筒洗衣机排行榜2025前十名

-

各有好处。1,上排水排的并不彻底,水泵处会有积水,要是放在特别冷的地方就需要在每次洗完衣服后动手给水泵排水。但也不会影响洗衣效果,因为有单向阀门限制水流向。但上排水在布置排水管时简单,上下都能排。2,...

- 世界美女排行榜前一百(世界美女排行榜100名2019)

-

2021年即将进入尾声,美国网站TCCandler每年都会选出全球百大美女排行榜,如今官方名单出炉,今年的第一名是韩国女团BLACKPINK的成员Lisa,去年是第二名。EmilieNereng今...

-

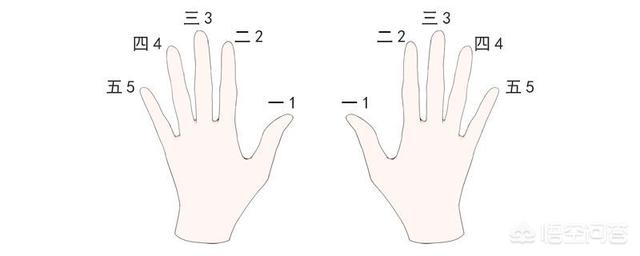

- 电子琴1234567指法图(1234567手势图)

-

尤克里里1234567的指法三弦空品是1,三弦二品是2,二弦空品是3,二弦一品是4,二弦三品是5,四弦空品也是5,一弦空品是6,四弦二品也是6,一弦二品是7。在弹奏尤克里里的时候要注意,手型是使用的古典手型,左手的一指左侧按琴弦,其余的手指...

-

2025-12-24 05:12 alicucu

- 长沙房产网(长沙房产网房天下)

-

长沙房地产公司排名1、湖南鑫远投资集团有限公司2、红星实业集团有限公司3、中建信和地产有限公司4、恒大地产集团长沙置业有限公司5、湖南保利房地产开发有限公司6、湖南天城置业发展有限公司7、长沙龙湖房地...

- 电子烟(电子烟有尼古丁吗)

-

电子烟是由中国人发明的,这个毋庸置疑。多数资料及实验显示。电子烟除尼古丁之外没有发现其他任何有害成分,对人体的不利影响也低于传统香烟。原材料不同:电子烟是微电子科技产品,有雾化器、锂电池和烟弹。目前市...

- 今年最流行的头发造型(今年最流行的头发造型和颜色)

-

时尚流行和个人喜好是选择头发颜色时需要考虑的因素,不过每年的流行趋势都会有所不同,以下列举几种近年来比较受欢迎的头发颜色:1.灰棕色:灰棕色是一种既低调又独特的中性色调,适合许多不同的肤色和发质,也非...

- 榴莲吃不完冷藏还是冷冻(榴莲冷冻的正确方法)

-

吃不完榴莲肉大多数可以冷冻,冷冻过后可能口感上会有一些差异,但是里面营养物质并不会流失,可以在吃时候解冻。但是避免长时间的冷冻,也会导致营养价值降低。榴莲的保存一般是冷冻比较好,而且可以存放的时间比较...

- 一周热门

- 最近发表

- 标签列表

-